Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

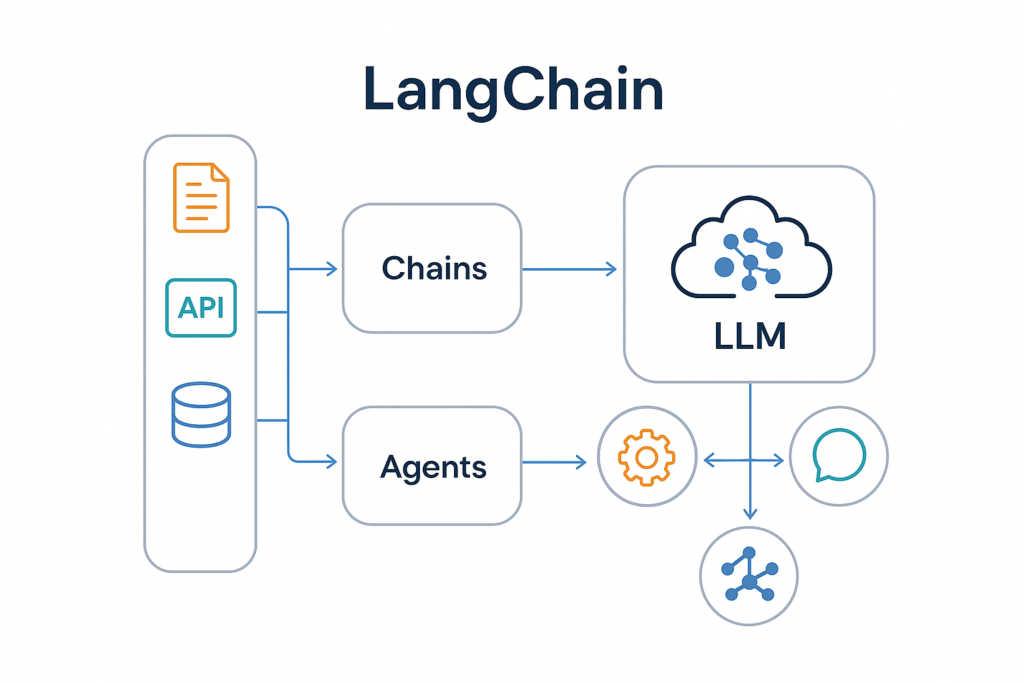

What is LangChain, and why is everyone talking about it in the world of AI? If you’ve been exploring Large Language Models (LLMs), you already know they’re incredibly powerful — but making them work for real-world tasks can feel like juggling too many pieces at once. Data sources, prompts, memory, and APIs all need to talk to each other, and without a good system, your workflows can get messy fast.

That’s where LangChain comes in. Think of it as the friendly middle layer that helps you connect all the dots. Instead of manually coding every single step, LangChain lets you chain prompts, tools, and models together, so your LLM can research, analyze, generate, and respond automatically.

Why does this matter? Without an orchestrator like LangChain, you might waste hours building ad-hoc scripts, debugging broken integrations, or repeating tasks manually. That leads to inefficiency, higher costs, and slower results — exactly what you don’t want when you’re building with cutting-edge AI.

In this guide, we’ll explore what LangChain is, why it’s becoming a go-to framework for developers and businesses, and how you can use it to build automated content workflows. By the end, you’ll know how to connect LLMs to your data, design multi-step workflows, and turn complex ideas into scalable solutions.

Think of LangChain as a toolkit that helps you get the most out of Large Language Models (LLMs). On its own, an LLM simply responds to one prompt at a time. LangChain goes a step further by connecting prompts together so your model can perform multi-step tasks.

At its core, LangChain is designed to connect LLMs with external data sources, APIs, and tools. This makes it easier to build workflows that don’t just answer a single question, but handle an entire process from start to finish. If you’ve followed a LangChain tutorial or explored the LangChain GitHub repository, you’ve probably noticed how developers chain prompts, call APIs, and even search their own documents, all in one flow.

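LangChain’s most powerful features, each covered in more detail later in this guide, are chains, agents, tools, memory, and retrieval-augmented generation (RAG).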

Why does this matter? Instead of writing manual scripts or repeating the same steps, you can use LangChain to automate the heavy lifting. Whether you work in Python or JavaScript, you can scale your AI projects faster and with less effort.

If you’re still wondering why everyone seems to be talking about LangChain, the answer is simple: it saves time, money, and effort. Businesses and developers alike are turning to LangChain because it makes working with Large Language Models (LLMs) much easier. Instead of writing endless custom code, you can build workflows that are fast, repeatable, and scalable.

For businesses, LangChain means efficiency. You can automate research, generate reports, and build chatbots without hiring a full data science team. This cuts costs and speeds up project delivery. Teams can focus on strategy instead of getting lost in technical details.

For developers, LangChain offers a simple abstraction layer. You don’t need to reinvent the wheel every time you connect an LLM to a new tool or data source. If you’ve ever followed a LangChain tutorial or browsed the LangChain GitHub repository, you know how quickly you can spin up prototypes and experiment.

Content teams love LangChain because it helps them automate repetitive work. You can summarize documents, cluster keywords, and even generate first drafts for SEO content. And since prompts and agents are easy to customize in LangChain’s Python library, you can adjust outputs to match your brand’s tone and goals.

Here’s a quick comparison:

| Without LangChain | With LangChain |
|---|---|
| Manual prompt writing for every task | Automated, connected chains of prompts |
| Disconnected tools and APIs | Unified workflow with agents selecting tools |
| Slow experimentation and debugging | Faster iterations, easy testing |
| Higher costs for repetitive work | Lower costs through automation |

LangChain doesn’t just make life easier — it changes how you think about building AI-powered solutions. Once you try it, you’ll never want to go back to manual workflows.

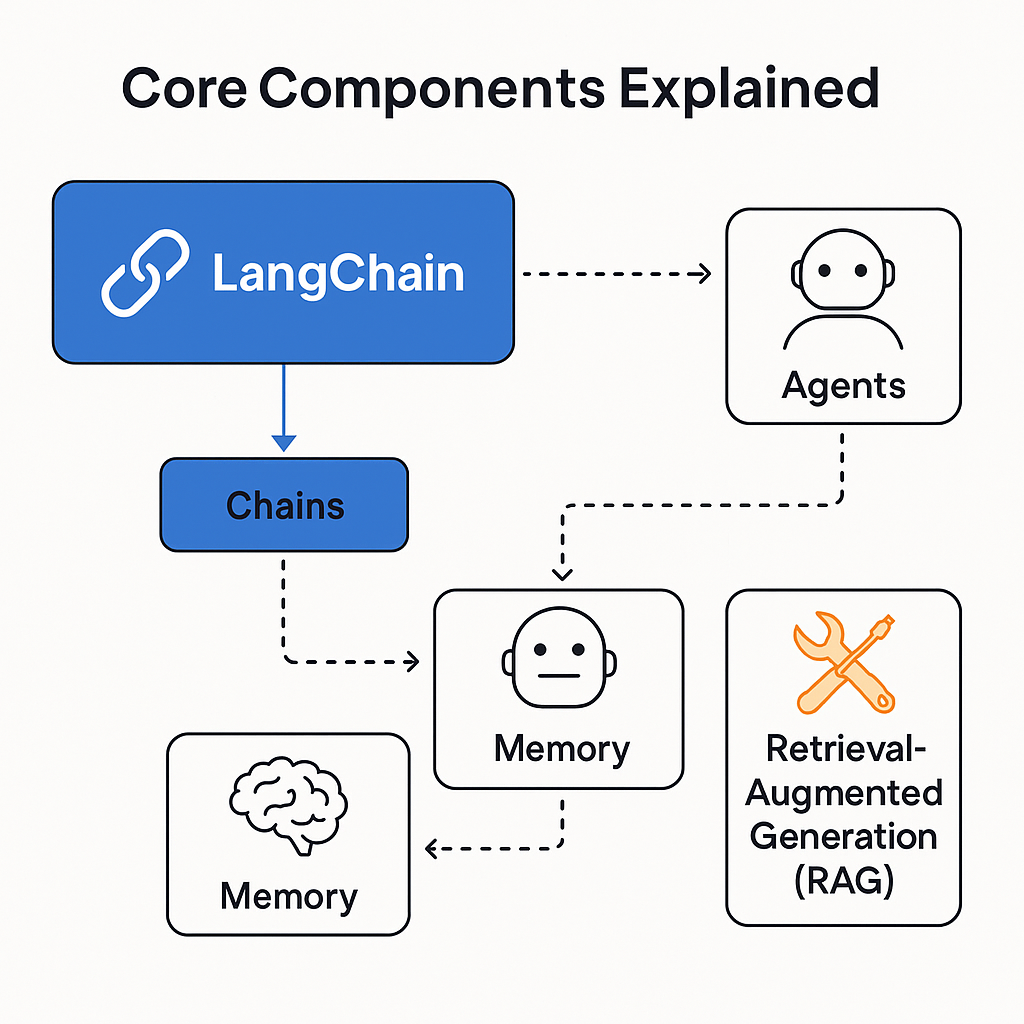

The easiest way to explain LangChain is to break it down into its main building blocks. Each part has a clear role, and when combined, they create smooth and powerful workflows.

Chains are the heart of LangChain. They are step-by-step instructions that guide your LLM to complete a task. A chain can be as simple as asking for a single response or as complex as running through multiple steps in order.

For example, imagine you want to summarize a long document and then turn that summary into social media posts. A single chain can first tell the model to summarize the text, then use that output to generate three short posts for Twitter or LinkedIn. This saves time and keeps everything consistent.
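The summarize-then-post flow above can be sketched in plain Python. This is only an illustration of the chaining idea, not LangChain's own API: `fake_llm`, `summarize`, `write_posts`, and `run_chain` are hypothetical names, and `fake_llm` echoes its prompt instead of calling a real model.

```python
from typing import Callable, List

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it only echoes the prompt."""
    return f"[model output for: {prompt}]"


def summarize(text: str) -> str:
    # Step 1: ask the (fake) model for a summary of the input text.
    return fake_llm(f"Summarize this text: {text}")


def write_posts(summary: str) -> str:
    # Step 2: turn the summary from step 1 into social media posts.
    return fake_llm(f"Write three short social posts based on: {summary}")


def run_chain(steps: List[Step], first_input: str) -> str:
    """Feed each step's output into the next step, in order."""
    value = first_input
    for step in steps:
        value = step(value)
    return value


result = run_chain([summarize, write_posts], "A long document about AI workflows")
print(result)
```

Because every step has the same shape (text in, text out), steps can be reordered or swapped without touching `run_chain`.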

LangChain agents add flexibility by letting the model decide which step to take next. They act like a smart assistant that chooses the right tool or action based on what the user needs.

For instance, if someone asks a question about a company policy, an agent can decide to search the internal knowledge base, read the answer, and then respond in a clear way. This is why developers enjoy building agents with LangChain in Python: it feels like giving your AI a brain that can make decisions on the fly.
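A rough sketch of that decision step, in plain Python, might look like the following. It is not LangChain's agent API. In a real agent the model itself picks the tool; here a simple keyword rule (`choose_tool`) stands in for that decision, and both tools are hypothetical placeholders that return canned text.

```python
from typing import Callable, Dict


def search_knowledge_base(query: str) -> str:
    """Hypothetical tool: pretends to look up an internal document."""
    return f"(knowledge base entry matching '{query}')"


def answer_directly(query: str) -> str:
    """Hypothetical tool: pretends to answer without any lookup."""
    return f"(direct answer to '{query}')"


TOOLS: Dict[str, Callable[[str], str]] = {
    "knowledge_base": search_knowledge_base,
    "direct": answer_directly,
}


def choose_tool(query: str) -> str:
    """Stand-in for the model's decision: a keyword rule instead of an LLM."""
    policy_words = ("policy", "handbook", "procedure")
    if any(word in query.lower() for word in policy_words):
        return "knowledge_base"
    return "direct"


def run_agent(query: str) -> str:
    tool_name = choose_tool(query)       # decide which tool fits the question
    observation = TOOLS[tool_name](query)  # call the chosen tool
    return f"{tool_name}: {observation}"


print(run_agent("What is our remote work policy?"))
print(run_agent("Say hi"))
```

The key design point is the separation between choosing a tool and running it, which is what lets the same loop work with any set of tools.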

Tools are what make LangChain so versatile. They allow you to connect your LLM to almost anything — a database, an API, or even a web search. You can use built-in tools or create your own.

Some popular combos include LangChain + OpenAI for natural language tasks, LangChain + DeepSeek for research-heavy workflows, and LangChain + Ollama for running models locally. A quick look at the LangChain GitHub repository shows just how many integrations the community has built.

Memory gives your workflow context. It lets the system “remember” what was said earlier and use it later in the conversation. This is essential for chatbots or assistants.

A good example is customer support. Memory allows the assistant to recall previous messages, so users don’t have to repeat themselves.
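To make the idea concrete, here is a minimal sketch of conversation memory in plain Python. It is an illustration under assumptions, not LangChain's memory classes: `ConversationMemory` and its methods are hypothetical names, and the sample messages are made up.

```python
from typing import List, Tuple


class ConversationMemory:
    """Keeps the most recent turns so they can be prepended to the next prompt."""

    def __init__(self, max_turns: int = 5) -> None:
        self.max_turns = max_turns
        self.turns: List[Tuple[str, str]] = []

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Drop the oldest turns once the window is full.
        self.turns = self.turns[-self.max_turns:]

    def as_prompt(self, new_message: str) -> str:
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        return f"{history}\nuser: {new_message}".strip()


memory = ConversationMemory(max_turns=4)
memory.add("user", "My order number is 1234.")
memory.add("assistant", "Thanks, I found order 1234.")
prompt = memory.as_prompt("When will it arrive?")
print(prompt)
```

Because the earlier turns travel with the new message, the model can resolve "it" to the order mentioned before, and the window size keeps the prompt from growing without bound.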

Retrieval-augmented generation (RAG) lets the model look up information before generating an answer. This helps keep responses accurate and grounded in the latest data.

For example, a RAG-powered system can pull up recent news or updated documents before creating a summary. This is one reason why so many LangChain tutorials focus on building RAG pipelines: they make answers better grounded and more reliable.
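The retrieve-then-generate pattern can be sketched without any libraries. Note the simplification: production RAG pipelines usually rank passages with embeddings and a vector store, while this sketch swaps in plain word overlap so it stays self-contained. The documents and all function names are made up for illustration.

```python
from typing import List

DOCUMENTS = [
    "LangChain chains run prompts in a fixed order.",
    "Agents let the model choose which tool to call next.",
    "Memory keeps earlier messages available to later prompts.",
]


def score(query: str, document: str) -> int:
    """Count the lowercase words shared by the query and the document."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words)


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents with the highest word overlap."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Place the retrieved passages in front of the question."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


question = "which tool do agents call"
top = retrieve(question, DOCUMENTS, k=1)
print(build_prompt(question, top))
```

The resulting prompt is what would be sent to the model, so the answer is constrained by whatever the retrieval step found.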

Now that we’ve covered what LangChain is and its core components, let’s look at how you can use it to build real-world workflows. These ideas work whether you are just starting with LangChain or following a tutorial from the LangChain GitHub repository to experiment with new features.

Instead of manually gathering information from multiple sources, you can create a chain that performs research, extracts key points, and summarizes them.

Example Workflow:

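Pseudo-code (LangChain in Python):

    # Legacy ("classic") LangChain import paths, kept from the original example.
    # Newer releases move OpenAI to the langchain-openai package and deprecate LLMChain.
    from langchain.chains import LLMChain, SimpleSequentialChain
    from langchain.prompts import PromptTemplate
    from langchain.llms import OpenAI

    search_chain = ...  # Your search API chain

    # Step 2: summarize whatever text the search step returns.
    summary_prompt = PromptTemplate(input_variables=["text"], template="Summarize this text:\n{text}")
    summary_chain = LLMChain(llm=OpenAI(), prompt=summary_prompt)

    # Run the steps in order; each chain's output becomes the next chain's input.
    overall_chain = SimpleSequentialChain(chains=[search_chain, summary_chain])
    print(overall_chain.run("AI trends in 2025"))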

This can save hours of manual research and ensure you never miss key points.

You can use LangChain agents to generate detailed content briefs, including title suggestions, outline structure, and key talking points.

Example: Create a brief for “AI workflow automation” with suggested H2s, FAQs, and word counts.

LangChain can group keywords into topic clusters, making your content strategy more organized.

This is a favorite use case for content marketers because it removes guesswork from topic planning.
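As a rough illustration of keyword clustering, the sketch below assigns each keyword to the first topic word it contains. This is a deliberately simple stand-in: a LangChain workflow would more likely ask the model or use embeddings to decide the grouping. The keywords, topics, and the `cluster_keywords` helper are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def cluster_keywords(keywords: List[str], topics: List[str]) -> Dict[str, List[str]]:
    """Group each keyword under the first topic that appears as one of its words."""
    clusters: Dict[str, List[str]] = defaultdict(list)
    for keyword in keywords:
        words = keyword.lower().split()
        # Keywords that match no topic land in an "other" bucket.
        match = next((topic for topic in topics if topic in words), "other")
        clusters[match].append(keyword)
    return dict(clusters)


keywords = [
    "langchain agents tutorial",
    "rag pipeline example",
    "build langchain agents",
    "what is rag",
    "llm pricing",
]
clusters = cluster_keywords(keywords, topics=["agents", "rag"])
for topic, members in clusters.items():
    print(topic, "->", members)
```

Matching on whole words instead of substrings avoids false hits such as "rag" inside "storage".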

You can combine chains to write first drafts optimized for SEO.

This is where LangChain in Python shines, because you can customize the prompts and refine outputs quickly.

LangChain makes it easy to build translation workflows that preserve tone and style.

This is great for global businesses and saves money compared to manual translation.

Why stop at content creation? You can use LangChain to automate posting across multiple platforms.

With LangChain agents, the system can decide which format is best for each channel.

You can feed your analytics data into a LangChain workflow and get plain-language insights.

This saves hours of manual number-crunching and gives you actionable insights fast.
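One way to prepare analytics data for such a workflow is to compute the changes yourself and hand the model a compact prompt. The sketch below shows that preparation step only. The metric names and numbers are made-up sample values, and `percent_change` and `build_insight_prompt` are hypothetical helpers, not part of LangChain.

```python
from typing import Dict, Tuple


def percent_change(previous: float, current: float) -> float:
    """Relative change in percent; assumes previous is not zero."""
    return (current - previous) / previous * 100


def build_insight_prompt(metrics: Dict[str, Tuple[float, float]]) -> str:
    """Turn (previous, current) pairs into a prompt asking for plain-language insights."""
    lines = []
    for name, (previous, current) in metrics.items():
        change = percent_change(previous, current)
        lines.append(f"{name}: {previous} -> {current} ({change:+.1f}%)")
    header = "Explain these week-over-week changes in plain language:"
    return header + "\n" + "\n".join(lines)


# Made-up sample values for illustration only.
metrics = {"sessions": (1000, 1200), "signups": (50, 40)}
insight_prompt = build_insight_prompt(metrics)
print(insight_prompt)
```

Doing the arithmetic in code and leaving only the explanation to the model keeps the numbers exact and the prompt short.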

LangChain Tutorials – Step-by-step guides for beginners.

LangChain GitHub – Explore examples and community projects.

LangChain Documentation – Learn more about chains, agents, and tools.

If you’ve read this far, you know what LangChain is and why it matters. Now let’s get your first workflow running. The good news? It’s easy to start, whether you prefer Python or JavaScript.

For Python:

    pip install langchain openai

For JavaScript/TypeScript:

    npm install langchain openai

Once installed, you can explore examples from the LangChain GitHub repository or follow a simple LangChain tutorial to get comfortable with the basics.

Here’s a quick example that uses LangChain in Python to generate a simple response:

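    # Legacy ("classic") import paths; newer LangChain releases ship OpenAI in the langchain-openai package.
    from langchain.llms import OpenAI
    from langchain.prompts import PromptTemplate
    from langchain.chains import LLMChain

    # A prompt template with one variable, {name}.
    prompt = PromptTemplate(input_variables=["name"], template="Say hello to {name}")

    llm = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable
    chain = LLMChain(llm=llm, prompt=prompt)

    # With a single input variable, run() accepts the value directly.
    print(chain.run("Human"))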

Run this, and you’ll see your LLM say hello to “Human.” This is the simplest version of a chain, but from here you can connect multiple steps, add tools, and even use LangChain agents to make decisions dynamically.

Getting started is quick, and once you run your first chain, you’ll see why developers love this framework. Whether you are building content workflows, experimenting with retrieval-augmented generation, or connecting APIs, LangChain makes the process smooth and scalable.

Once you understand what LangChain is and start experimenting, it’s important to follow some good habits so your workflows stay efficient and reliable. Here are a few do’s and don’ts that will save you headaches later.

Skipping Testing: Test each chain individually before combining them. LangChain agents can behave unpredictably if you don’t check their output step by step.

Over-Engineering: Don’t make your chains too complicated too soon. Start small, test, and iterate.

Ignoring Token Limits: Always check token limits for your chosen LLM. Exceeding them can break your chain or lead to partial responses.
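For the token-limit point, a simple guard is to estimate the size of the input and split it before it reaches the model. The sketch below uses a rough rule of thumb of about four characters per token, which is an assumption and not an exact count; a real project should use the tokenizer that matches its model. `estimate_tokens` and `split_to_budget` are hypothetical helper names.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough estimate, assuming about 4 characters per token."""
    return max(1, len(text) // 4)


def split_to_budget(text: str, max_tokens: int) -> List[str]:
    """Split text on word boundaries into chunks that fit the estimated budget.

    A single word longer than the budget is still emitted as its own chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    for word in text.split():
        candidate = " ".join(current + [word])
        if current and estimate_tokens(candidate) > max_tokens:
            # Adding this word would exceed the budget, so close the chunk.
            chunks.append(" ".join(current))
            current = [word]
        else:
            current.append(word)
    if current:
        chunks.append(" ".join(current))
    return chunks


sample_text = "word " * 50
chunks = split_to_budget(sample_text, max_tokens=10)
print(len(chunks), "chunks")
```

Each chunk can then be sent through the chain separately and the partial results combined afterwards.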

When you first learn about LangChain, it’s natural to wonder how it compares to other frameworks. While LangChain is one of the most popular options, tools like LlamaIndex and LangGraph have their own strengths. Choosing the right tool depends on what you are building.

LangChain is great if you want a complete workflow framework that supports chaining prompts, using agents, and integrating external tools. If you check the LangChain GitHub repository, you’ll see a thriving community contributing new features and integrations almost every week.

Here’s a simple comparison:

| Feature | LangChain | LlamaIndex | LangGraph |
|---|---|---|---|
| Focus | Full LLM workflow orchestration (chains + agents + tools) | Data indexing & retrieval for LLMs | Graph-based workflows for structured reasoning |
| Ease of Use | Easy to start with tutorials and Python examples | Simple for retrieval tasks | Slightly more technical, better for advanced users |
| Community Support | Large and active (see LangChain GitHub) | Growing community, focused on RAG use cases | Niche but strong community |
| Integrations | Many built-in tools, API support, memory, RAG | Strong retrieval integrations | Good for experimental workflows |
| Best For | Complex content workflows, chatbots, agents | Search & knowledge-base applications | Graph-style logic-heavy pipelines |

If you’re just starting, LangChain is often the easiest to pick up. Once you get comfortable, you can experiment with combining LlamaIndex for document indexing or using LangGraph for special reasoning tasks.

The future of LangChain looks bright. As more people discover LangChain and start using it for real-world projects, its ecosystem is growing quickly. The framework has already gone from a simple idea to one of the most popular tools for building LLM-powered applications.

We’re seeing new integrations almost every month. Developers are connecting LangChain to more APIs, databases, and even local model runners like Ollama. This means you’ll be able to build faster, more secure workflows without reinventing the wheel. If you’ve explored the LangChain GitHub repository, you’ve probably noticed how often new pull requests and examples are added. The pace of development is impressive.

Community-driven improvements are also shaping the future. People are sharing best practices, writing LangChain tutorials, and contributing new tools. Features like memory, RAG, and agents are becoming more polished and powerful thanks to feedback from real users.

LangChain’s Python library will likely keep evolving too, with more support for async workflows and cost tracking. The goal is to make it easier for both beginners and advanced developers to experiment, test, and scale their projects.

In short, LangChain isn’t just a tool — it’s becoming a foundation for the next generation of AI workflows. Now is the perfect time to start learning and building with it.

LangChain is an open-source framework that helps developers connect large language models (LLMs) to data sources, APIs, and tools. It allows you to build automated, multi-step workflows quickly.

Yes, LangChain itself is free and open source. You may still pay for the underlying LLM API calls (like OpenAI or Anthropic), depending on how much you use them.

You can build chatbots, content generation workflows, document Q&A systems, research tools, and even AI agents that decide which step to take next.

The best way is to check the LangChain GitHub repo, follow a step-by-step LangChain tutorial, and try a simple example in Python to understand the basics.

For more guides, strategies, and tips, check out icebergaicontent.com.
